On “Robots Learn to How to Move By Watching Themselves”

AIVO recently caught up with Yuhang Hu, PhD student at Columbia University. We talked about his work with the AI Institute for Dynamic Systems (DynamicsAI). Check out this excerpt from our interview:

AIVO: Yuhang, you’re working with DynamicsAI on a project to help robots learn how to move by watching themselves. What prompted you to delve into this research area specifically?

Yuhang: I’ve always been curious about how humans and animals learn to move and interact with the world—not through instructions, but by seeing themselves, trying things out, and adjusting. That kind of self-learning feels really natural to us, but robots usually need detailed programming or external supervision to do even simple tasks.

When I started working with robots, I wanted to see if we could make them learn more like we do—by watching themselves and figuring things out on their own. So with the DynamicsAI project, we’re teaching robots to understand how their own bodies move just from observation, like building a sense of self. It’s exciting because it means robots can adapt when something changes—like if a joint wears down or they pick up something heavy—without needing to be reprogrammed. I believe this kind of flexibility and independence is a foundational capability for the next generation of robots.

AIVO: Can you give us some details about your research?

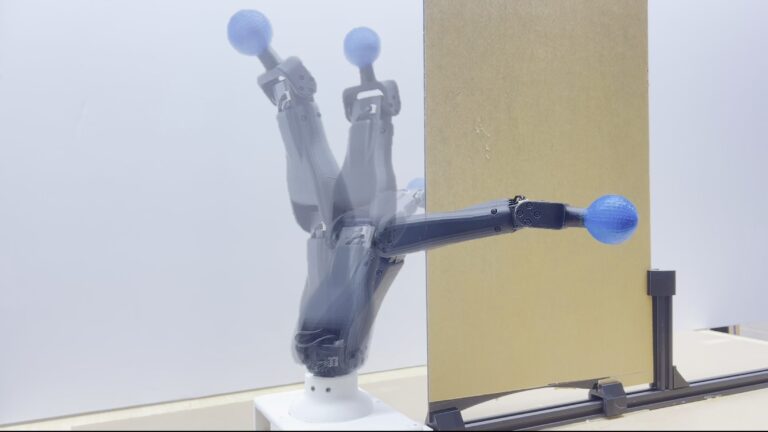

In our lab, a creative machines lab, we have different kinds of robots. And we use a learning framework, which we call self-supervised learning.

We create self-models for a different kind of robot, and try to create a model for the robot to do things itself. This is instead of creating a linear function or using pre-programmed hard code for the robot to do some simple task. So it’s a different way.

We use deep neural networks as a representation for the robot, kinematics, and dynamics.

AIVO: Of the five top applications of your research, which do you believe is the most imminent?

Yuhang: We presented Free-Form Kinematic Self-Model (FFKSM), which allows the robot to build a kinematic self-representation only from real-world vision data. No explicit simulations or pre-engineered physics parameters are required. The system uses multiple deep neural networks to predict and verify its own shape and movement.

Essentially, it’s like a robot learning how to move through a reflection in the mirror. This work has multiple applications.

The top five applications I can see:

- Teaching robots to build escalations by themselves: Instead of relying on manually engineered simulations based on the robot’s kinematics, dynamics, and morphology, our system allows the robot to generate its own internal simulation directly from real-world visual data.

- Collision-free motion for complex shapes: This approach is especially useful for collision-free motion planning in robots with complex or changing body structures, enabling them to safely navigate and interact with their environment in real time.

Because the robot is learning from real-world data, it knows how it looks during movement. I think that’s quite powerful. This can be deployed for robot’s body structures as they evolve throughout their whole life. - Lifelong learning and adaptation: It keeps updating its model over time, which means it can adapt as it changes—like adding new tools or joints.

For lifelong learning machines or those with a self-model, the robot’s kinematics and dynamics can be the model. As the robot keeps updating such models, it can even adapt when it’s damaged or broken. - Damage recovery: If something breaks, it can sense the issue and re-learn how to move without needing external repair or reprogramming.

Once the robot can figure out the difference between the normal controller and the current controller, it can detect when a binomial state happens. It can keep learning from a broken state or a damaged state. - Reusable backbone that generalizes across robot types: Spatial representation for Vision-Language Models (VLMs) is the most important one! It works across different degrees of freedom and robot morphologies — whether it’s a fixed-base robot arm or a mobile, reconfigurable system.

Because the robot learns a unified self-representation from vision alone, it builds a spatial understanding of its own body and movements. We believe this can act as a kind of “kinematic foundation model”—a reusable backbone that can generalize across robot types.This foundation becomes especially valuable when integrated with VLMs. Currently, most VLMs lack physical embodiment—they can describe actions or spatial relations, but don’t truly “understand” the robot’s shape, reach, or limits. By embedding kinematic awareness into the VLM pipeline, the system can be more physically grounded, even across robots with different configurations.

AIVO: What’s the role of DynamicsAI in your project?

Yuhang: DynamicsAI has played a really crucial role in this project. It’s been a great place to exchange ideas and stay updated on the latest in AI and robotics. We’ve had the chance to share experiences both in optimizing model performance and in brainstorming new ideas in machine learning (ML).

AIVO: How do you work with their team to move your project forward?

The collaboration with DynamicsAI has been very inspiring. Everyone brings experience in deploying large-scale ML systems, and we always share our experience in deploying such systems. That’s helped all of us make the models more efficient and robust.

Looking ahead, I’m excited to keep working in this space and creating more collaborations — particularly in building foundational models for dynamics understanding through vision. There’s a lot of potential there.

AIVO: How did you find out about the opportunity to have a project with DynamicsAI?

Yuhang: I was introduced to the opportunity by my advisor, Professor Hod Lipson. Early in my PhD, I was already deeply interested in the intersection of robotics and AI, especially how robots can learn and adapt on their own. When Professor Lipson invited me to connect with DynamicsAI, it felt like a perfect fit. It gave me the chance to work on real-world problems in a cutting-edge group.

AIVO: You’re studying robotics and AI at Columbia University. What are some ways you plan to use your PhD after you graduate?

Yuhang: After graduation, I plan to continue working in robotics and AI—either by leading research in a lab or building a company focused on next-generation humanoid robots.

I believe there’s huge potential in developing robots that can understand their own bodies, perceive the world to learn, and interact with humans intuitively. Long-term, I want to contribute to shaping how embodied AI becomes part of everyday life.

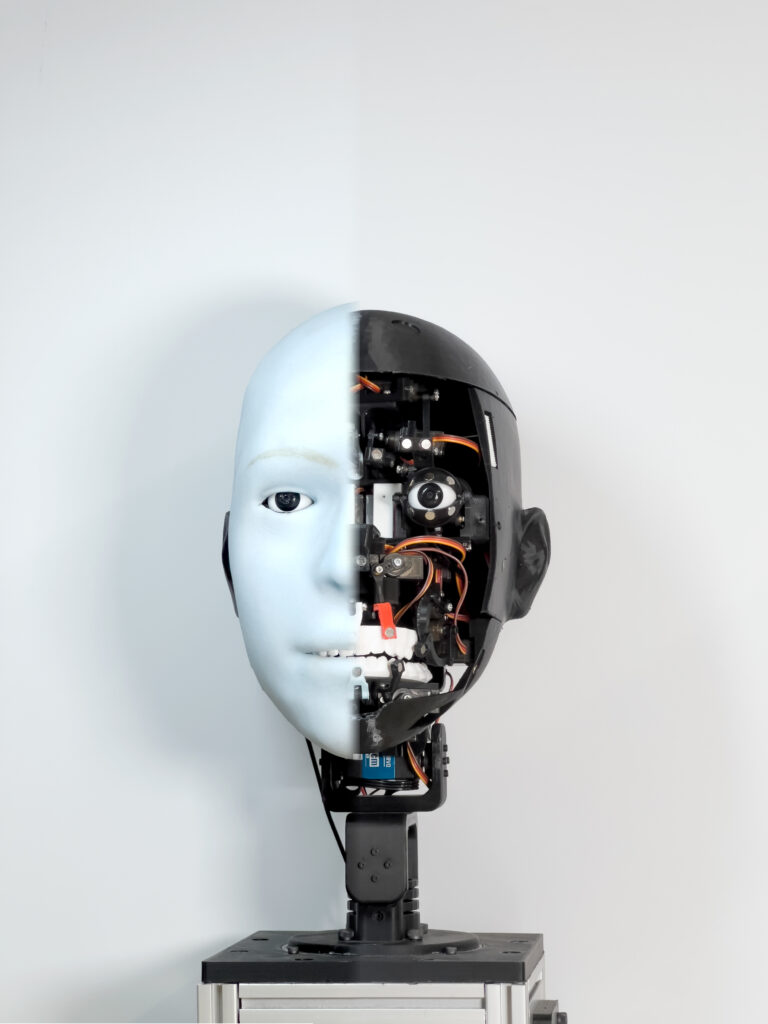

AIVO: You also had a project with DynamicsAI that was called “Human-Robot Facial Coexpression.” What prompted you to study robot facial expression?

Yuhang: I think facial expression is quite important in human-robot interaction, especially to convey emotion.

Facial expressions play a huge role in human communication, and yet most robots today are expressionless or feel uncanny. I wanted to explore how we could build robots that can not only recognize emotions but also respond with their own facial expressions in time – definitely in a way that feels natural and emotionally engaging.

AIVO: Can you give us some details about your research? What is the goal of your project and/or its practical or real-world use?

Yuhang: We developed a robot with a soft, expressive face driven by 26 actuators. Inside, two neural networks work together: one produces the robot’s own facial expression, and the other predicts the emotional state of the human.

The goal of this project is to create truly natural interactions between humans and robots—ones that feel life-like.

We currently have verbal communication tools like ChatGPT, and it’s super powerful. But with the lack of nonverbal communications skills, robots have to learn these skills. They should learn a number of communication skills. When we combine these two things together, we can create truly natural interactions between humans and robots.

Just like the first time many of us talked to ChatGPT and we were surprised by how real and responsive it felt, I want people to have that same feeling when they interact face-to-face with a robot, especially a humanoid robot. Because humanoid robots will, I think, eventually live in our daily lives because our world is built for a human-like shape.

And so if such robots are in our daily life, then they definitely need a better communication platform or head – a head that can create expressive emotion. I think it’s really important.

AIVO: What’s the role of DynamicsAI in this project? How do you work with their team to move your project forward?

Yuhang: We have a lot of research seminars every year.

And for the first project, the idea is about how to create dynamic models for soft robotics. Right now, we have a face scheme that is soft and controlled parallelly by 26 motors. So it’s actually very hard to control problems. And with people in DynamicsAI, we all collaborate together. We share our ideas and brainstorm. We got a lot of insights from this group!

A sincere thank you to Yuhang for taking time out to talk with us! We’re so excited about your accomplishments, and we’re looking forward to more great discoveries as you progress in your career!

Learn more about Yuhang’s work

- Teaching robots to build simulations of themselves (subscription required)

- Human-robot facial coexpression

About DynamicsAI

DynamicsAI aims to develop the next generation of advanced ML tools for controlling complex dynamic physical systems by discovering physically interpretable and physics-constrained data-driven models through optimal sensor selection and placement.